Auto-Generated Pop Quizzes: Real-Time Assessment from Live Lectures

In a 200-student lecture on recursive algorithms, an instructor pauses after explaining base cases and recursion depth. Gauging comprehension across the entire class presents a familiar challenge: raised hands come from self-selected students, nodding heads may indicate understanding or polite attention, and verbal responses reveal little about the silent majority.

Traditional formative assessment tools offer partial solutions. Clicker questions provide binary feedback but miss nuanced misconceptions. Minute papers arrive too late for in-class adjustment. Open-ended questions elicit richer responses, but manually creating relevant questions while teaching—and processing hundreds of answers in real time—has been impractical.

Recent developments in speech recognition and natural language processing shift this calculus. Systems can now continuously monitor lecture audio, analyze content for key concepts, and generate contextually relevant questions with a single button press. The entire process—from lecture content to deployed quiz to analyzed results—occurs within minutes, providing actionable comprehension data while the material remains fresh.

The Listening Mechanism

The system operates on a continuous capture model. During class, the instructor’s audio is transcribed in real time, creating a running record of lecture content. Natural language processing identifies topic boundaries, key concepts, and pedagogical transitions. The system builds a semantic map of what has been taught, when, and in what context.

This differs fundamentally from pre-planned assessment. Rather than requiring instructors to anticipate which concepts will need reinforcement and manually craft questions in advance, the system responds to what was actually taught. If an instructor spends twenty minutes on recursion edge cases because student questions indicated confusion, the generated quiz reflects that emphasis. If a planned topic is covered quickly because students demonstrate rapid comprehension, the quiz adapts accordingly.

The technical architecture is straightforward:

┌─────────────────┐    ┌──────────────────┐    ┌────────────────────┐
│   Instructor    │───▶│   Kai Captures   │───▶│  Content Analysis  │
│    Teaching     │    │  Lecture Audio   │    │ & Concept Mapping  │
└─────────────────┘    └──────────────────┘    └────────────────────┘
                                                         │
                             ┌───────────────────────────┘
                             ▼
                  ┌──────────────────────┐
                  │ Semantic Database:   │
                  │ - Key concepts       │
                  │ - Time segments      │
                  │ - Topic boundaries   │
                  │ - Emphasis patterns  │
                  └──────────────────────┘

The instructor teaches. The system listens. A database of lecture content builds continuously, ready for instant quiz generation when needed.
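As a rough illustration of the continuous capture model, the sketch below keeps a time-ordered log of transcribed segments and reports how much lecture time each concept received in a recent window. The `Segment` and `LectureLog` names, the field layout, and the choice to measure emphasis in seconds are assumptions made for this example, not a description of the product's internals. Concept tags are assumed to arrive from an upstream analysis step.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """One transcribed stretch of lecture audio (hypothetical structure)."""
    start_s: float                      # seconds since the start of class
    end_s: float
    text: str
    concepts: list[str] = field(default_factory=list)


class LectureLog:
    """Append-only record of what was said, kept in time order."""

    def __init__(self) -> None:
        self._segments: list[Segment] = []

    def add(self, segment: Segment) -> None:
        self._segments.append(segment)

    def recent(self, now_s: float, window_min: float) -> list[Segment]:
        """Segments that overlap the last `window_min` minutes."""
        cutoff = now_s - window_min * 60
        return [s for s in self._segments if s.end_s > cutoff and s.start_s <= now_s]

    def emphasis(self, now_s: float, window_min: float) -> dict[str, float]:
        """Seconds of lecture time attributed to each concept in the window."""
        totals: dict[str, float] = {}
        for s in self.recent(now_s, window_min):
            for concept in s.concepts:
                totals[concept] = totals.get(concept, 0.0) + (s.end_s - s.start_s)
        return totals


log = LectureLog()
log.add(Segment(0, 300, "What recursion is", ["recursion"]))
log.add(Segment(300, 900, "Choosing a base case", ["base case"]))
log.add(Segment(900, 1500, "Edge cases and depth", ["base case", "recursion depth"]))

# 25 minutes into class, look back over the last 20 minutes.
totals = log.emphasis(now_s=1500, window_min=20)
print(totals)  # {'base case': 1200.0, 'recursion depth': 600.0}
```

In this sketch the opening five minutes fall outside the window, so a quiz drawn from it would lean toward base cases, which took up the most lecture time.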

One-Click Quiz Generation

The quiz creation workflow is deliberately simple. When the instructor reaches a natural break—mid-lecture, during a pause, or at the end of class—a single button press triggers automated question generation. The system reviews the previous 10-20 minutes of lecture content, identifies the key concepts presented, and generates 5-10 questions directly tied to the material.

The process occurs entirely automatically:

┌─────────────────┐    ┌──────────────────┐    ┌────────────────────┐
│   Instructor    │───▶│  Quiz Generated  │───▶│    Push to All     │
│  Clicks Button  │    │   from Lecture   │    │  Student Devices   │
└─────────────────┘    └──────────────────┘    └────────────────────┘
                                                         │
   ┌─────────────────────────────────────────────────────┘
   ▼
┌──────────────────────────────────────────────────────────────────┐
│                   Auto-Generated Quiz Content                    │
├────────────────────────┬─────────────────────────────────────────┤
│ Multiple Choice        │ Conceptual understanding                │
│ Short Answer           │ Deeper comprehension                    │
│ True/False             │ Quick knowledge checks                  │
│ Default: 15 minutes    │ Timed response with countdown           │
└────────────────────────┴─────────────────────────────────────────┘

No manual question writing. No advance preparation. The instructor teaches, the system captures content, and one button press deploys a contextually relevant assessment.
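The settings behind that button press can be pictured as a small request object. The sketch below is hypothetical: the `QuizRequest` name, its fields, and the round-robin assignment of question types are illustrative choices, and treating the 10-20 minute window and 5-10 question count as hard limits is an assumption drawn from the ranges quoted above.

```python
from dataclasses import dataclass
from itertools import cycle, islice

QUESTION_TYPES = ("multiple_choice", "short_answer", "true_false")


@dataclass(frozen=True)
class QuizRequest:
    """Hypothetical settings for one generated quiz."""
    window_min: int = 15          # how far back in the lecture to look (10-20 in the text)
    num_questions: int = 6        # 5-10 in the text
    time_limit_min: int = 15      # default response window
    types: tuple[str, ...] = QUESTION_TYPES

    def __post_init__(self) -> None:
        if not 10 <= self.window_min <= 20:
            raise ValueError("window_min should fall within 10-20 minutes")
        if not 5 <= self.num_questions <= 10:
            raise ValueError("num_questions should fall within 5-10")
        unknown = set(self.types) - set(QUESTION_TYPES)
        if not self.types or unknown:
            raise ValueError(f"unsupported question types: {sorted(unknown)}")

    def type_plan(self) -> list[str]:
        """Assign a type to each question slot by cycling through the enabled types."""
        return list(islice(cycle(self.types), self.num_questions))


plan = QuizRequest(num_questions=7).type_plan()
print(plan)
```

With the defaults and seven questions, the plan cycles through the three types and ends with three multiple-choice, two short-answer, and two true/false slots.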

The Creation-to-Results Workflow

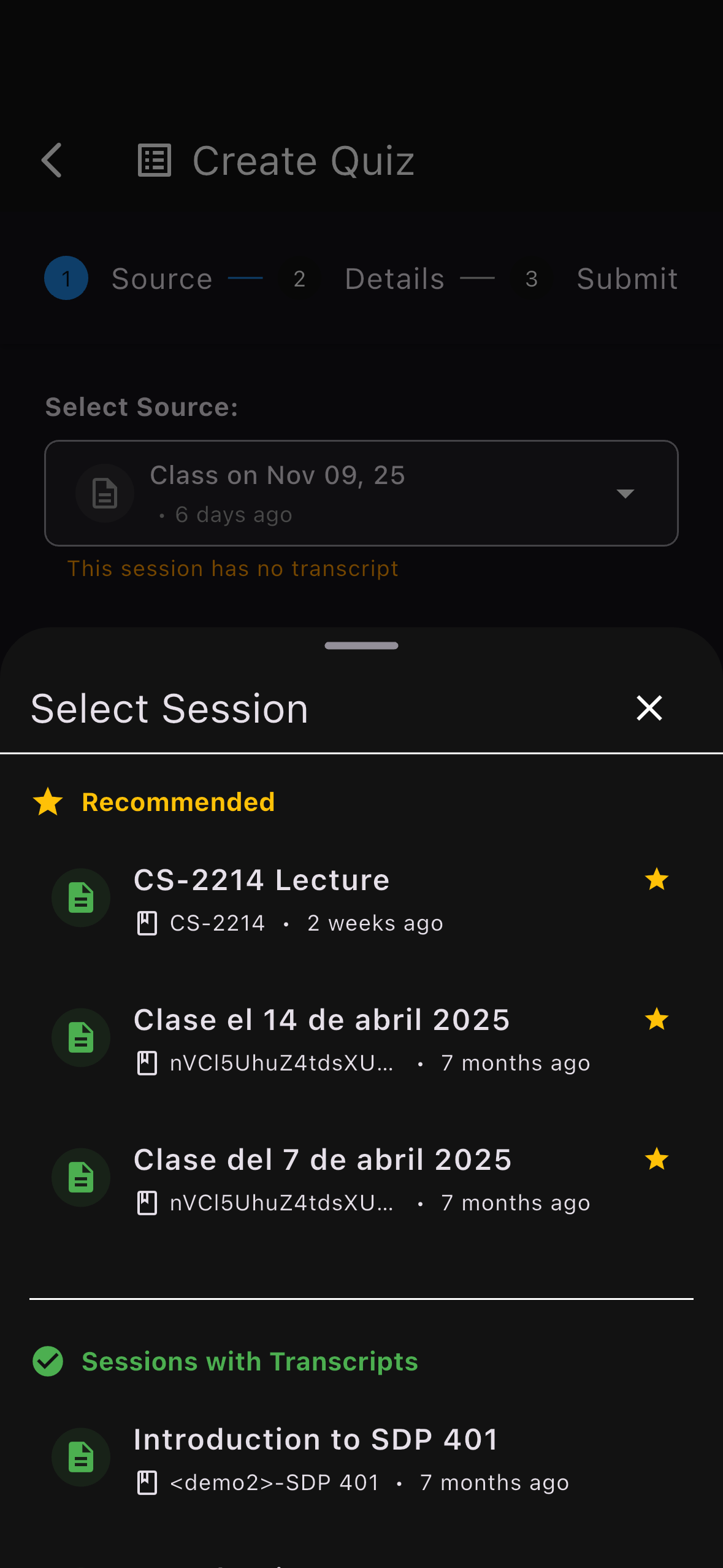

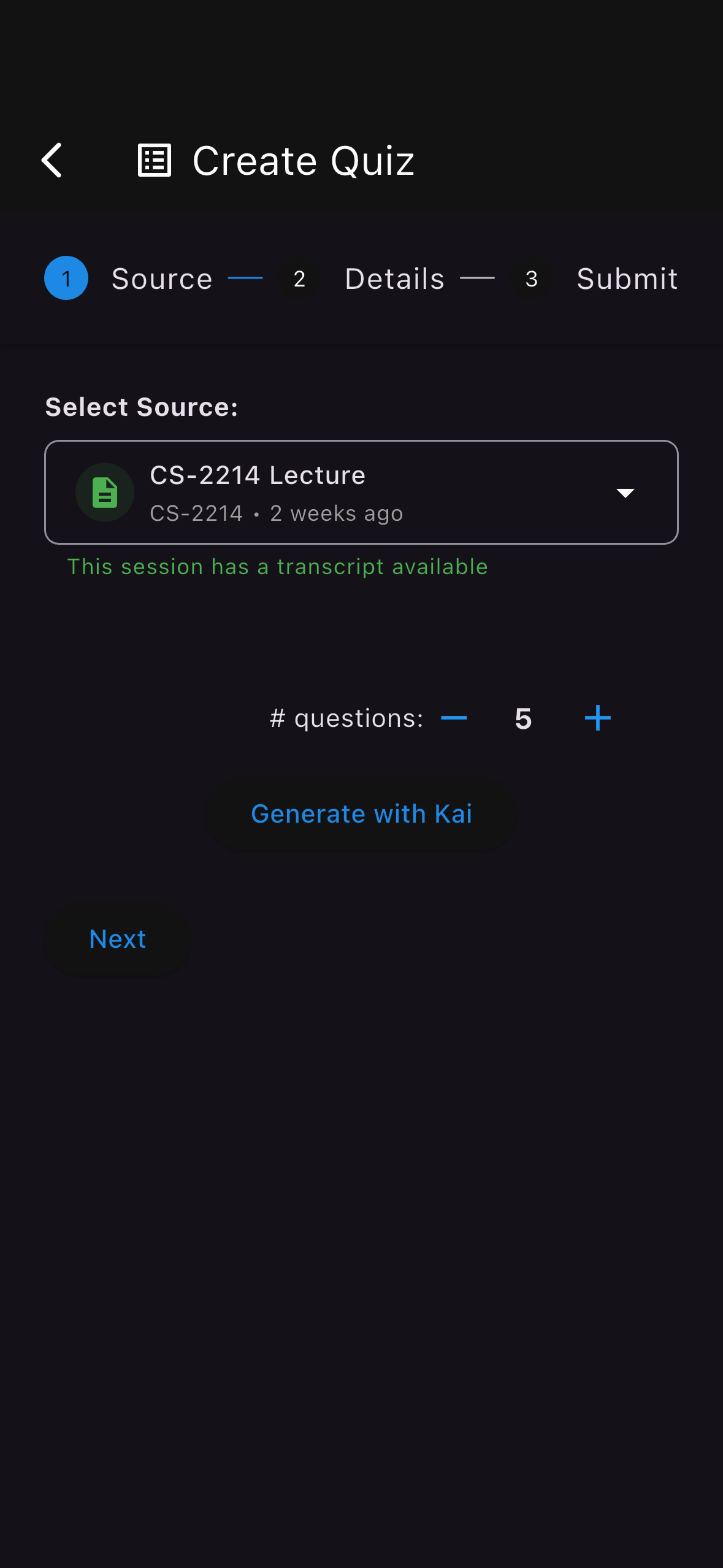

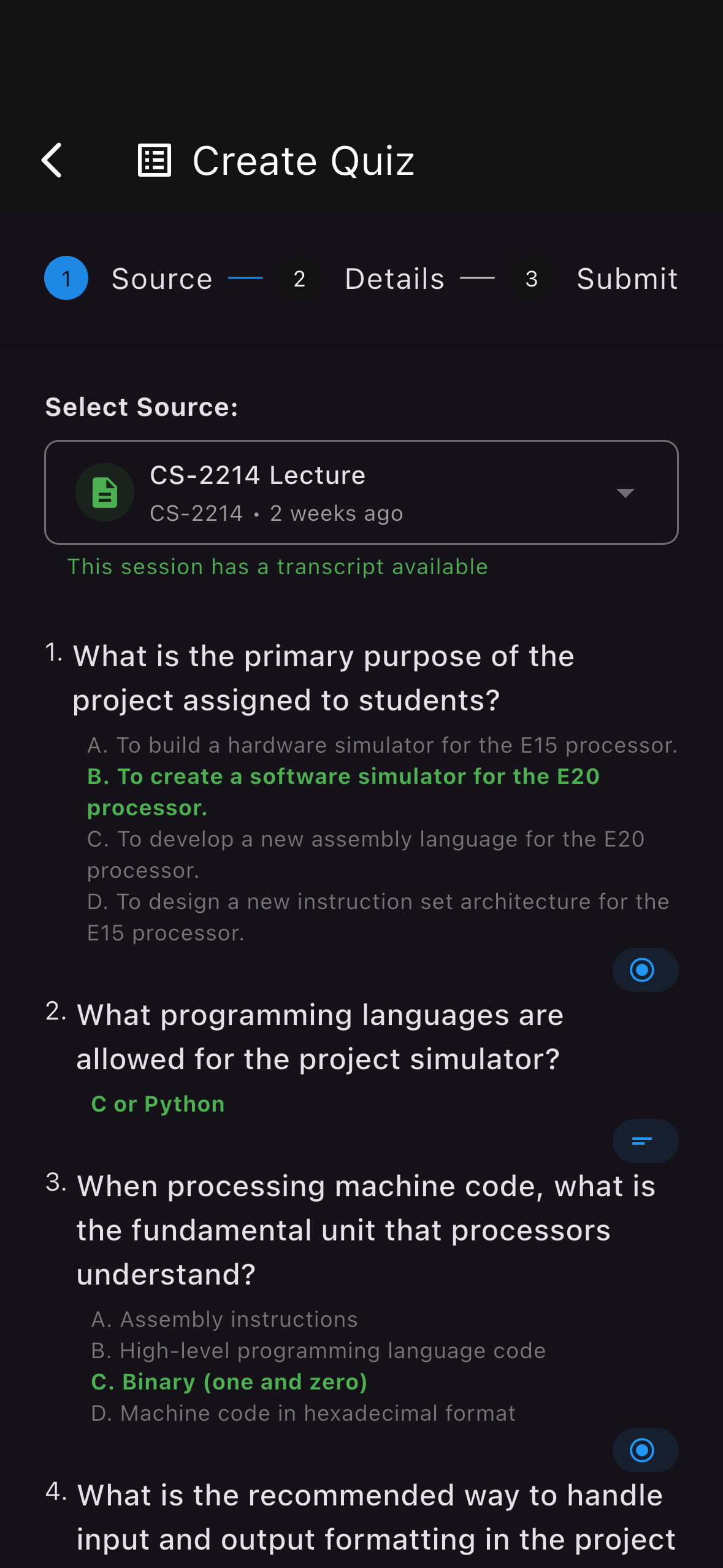

The complete workflow from instructor decision to analyzed results occurs within a compressed timeframe. The following screenshots illustrate the instructor’s experience:

Step 1: Create Quiz Request. A single button initiates quiz generation from lecture content.

Step 2: Configure Settings. The instructor can optionally adjust the time limit and question types.

Step 3: Preview Questions. The auto-generated questions, based on recent lecture content, are shown for review.

Step 4: Deploy to Students. A push notification is sent to all enrolled students.

Students receive the notification instantly: a push alert appears on every student device with a countdown timer.

The default 15-minute window creates urgency while allowing time for thoughtful responses. Students complete the quiz on mobile devices, with answers syncing even when connectivity is intermittent. As responses arrive, the system processes them continuously.
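One common way to tolerate intermittent connectivity is a client-side outbox paired with a server that stores answers by key, so a resent answer overwrites rather than duplicates. The sketch below shows that pattern under stated assumptions: `AnswerOutbox`, `AnswerStore`, and the `(student, question)` key are hypothetical names for illustration, and nothing here is taken from the actual mobile client.

```python
from collections import deque
from typing import Callable

Answer = tuple[str, str, str]  # (student_id, question_id, response)


class AnswerStore:
    """Server side: keyed by (student, question), so a retry cannot create a duplicate."""

    def __init__(self) -> None:
        self.answers: dict[tuple[str, str], str] = {}

    def receive(self, answer: Answer) -> bool:
        student, question, response = answer
        self.answers[(student, question)] = response
        return True  # acknowledgement


class AnswerOutbox:
    """Client side: answers wait here until the server acknowledges them."""

    def __init__(self, send: Callable[[Answer], bool]) -> None:
        self._send = send
        self._pending: deque[Answer] = deque()

    def submit(self, answer: Answer) -> None:
        self._pending.append(answer)
        self.flush()

    def flush(self) -> int:
        """Send queued answers in order, stopping at the first failure."""
        sent = 0
        while self._pending:
            if not self._send(self._pending[0]):
                break  # still offline: keep the answer for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._pending)


# Simulate a device that is offline for the first two answers.
store = AnswerStore()
network = {"online": False}
outbox = AnswerOutbox(lambda a: network["online"] and store.receive(a))

outbox.submit(("s1", "q1", "B"))
outbox.submit(("s1", "q2", "True"))
queued_while_offline = outbox.pending      # 2

network["online"] = True
delivered = outbox.flush()                 # 2
print(queued_while_offline, delivered, store.answers)
```

A real client would also persist the queue across app restarts and retry on a timer; both are omitted here to keep the pattern visible.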

Real-Time Analytics

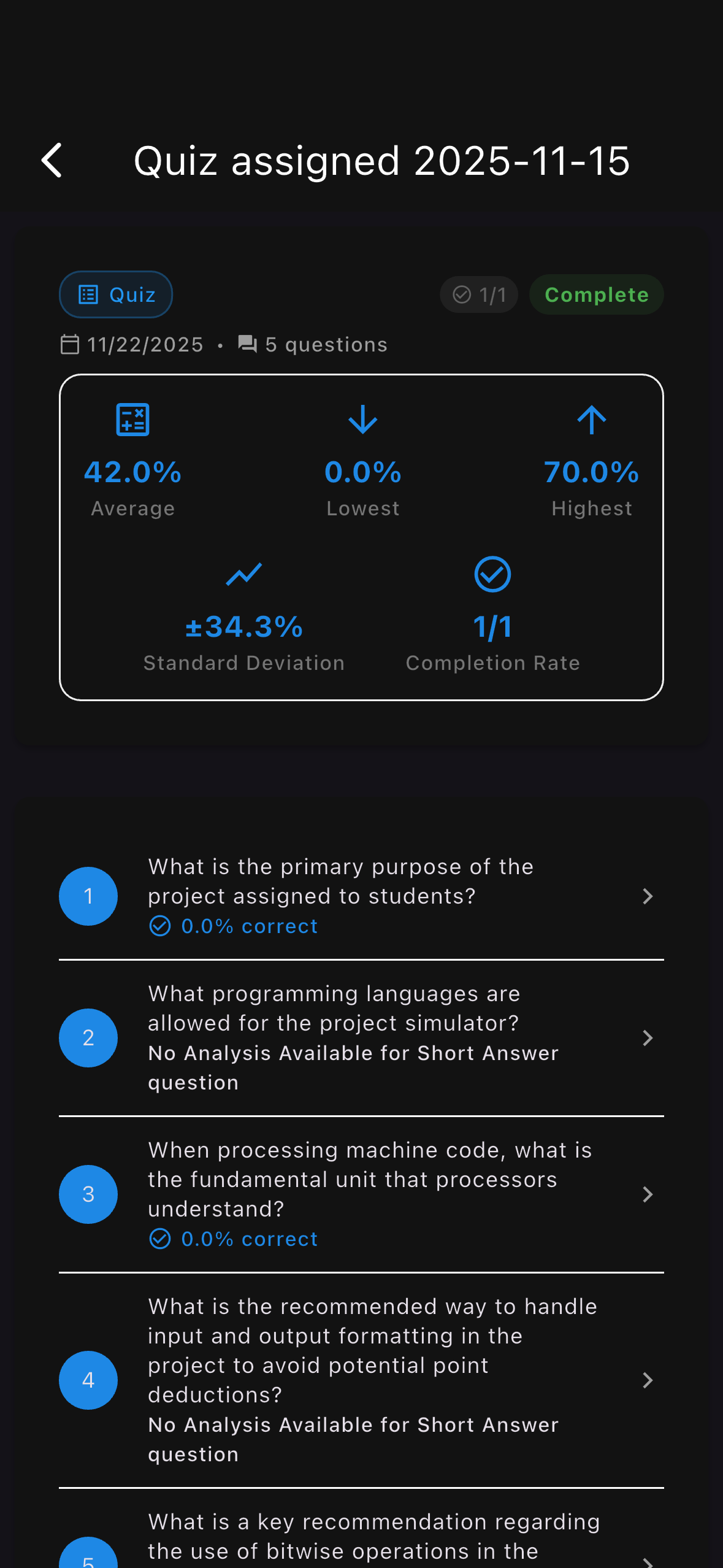

Within the 15-minute quiz window, the instructor dashboard updates with live results. The system tracks completion rates, identifies response patterns, and flags common misconceptions as they emerge. By the time students finish, a comprehensive analysis is ready:

Real-Time Results Dashboard

Live score tracking, pattern recognition, and individual performance metrics

The analytics include several distinct types of insight:

Accuracy distribution: Which questions showed strong comprehension? Where did most students struggle?

Response clustering: For multiple-choice questions, are incorrect answers distributed randomly, or clustering around specific misconceptions?

Time patterns: Did students rush through certain questions? Did others require more time than allocated?

Individual flags: Which specific students showed difficulty across multiple questions, suggesting the need for individual support?

This information arrives while the class session continues or immediately afterward, enabling instructional response while the material remains current.
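The four kinds of insight listed above can each be computed from a flat list of responses. The following sketch uses made-up response records and a hypothetical `summarize` function; the record layout, the 50% flagging threshold, and the "share of wrong answers on one option" measure of clustering are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical records: (student_id, question_id, chosen_option, seconds_spent)
responses = [
    ("s1", "q1", "A", 40), ("s2", "q1", "A", 35), ("s3", "q1", "C", 50),
    ("s4", "q1", "A", 30), ("s5", "q1", "A", 45),
    ("s1", "q2", "B", 90), ("s2", "q2", "D", 20), ("s3", "q2", "D", 25),
    ("s4", "q2", "D", 15), ("s5", "q2", "C", 30),
]
answer_key = {"q1": "A", "q2": "B"}


def summarize(responses, answer_key, flag_below=0.5):
    by_question = defaultdict(list)
    by_student = defaultdict(list)
    for student, question, choice, seconds in responses:
        correct = choice == answer_key[question]
        by_question[question].append((choice, correct, seconds))
        by_student[student].append(correct)

    report = {}
    for question, rows in by_question.items():
        wrong = Counter(choice for choice, correct, _ in rows if not correct)
        top_wrong, top_count = wrong.most_common(1)[0] if wrong else (None, 0)
        report[question] = {
            # Accuracy distribution: fraction of students answering correctly.
            "accuracy": sum(correct for _, correct, _ in rows) / len(rows),
            # Response clustering: a share near 1.0 suggests one shared misconception.
            "top_wrong": top_wrong,
            "top_wrong_share": top_count / sum(wrong.values()) if wrong else 0.0,
            # Time patterns: average seconds spent on the question.
            "mean_seconds": sum(seconds for _, _, seconds in rows) / len(rows),
        }
    # Individual flags: students whose overall accuracy falls below the threshold.
    flagged = sorted(s for s, marks in by_student.items()
                     if sum(marks) / len(marks) < flag_below)
    return report, flagged


report, flagged = summarize(responses, answer_key)
print(report["q2"])
print(flagged)  # ['s3']
```

In this toy data, question `q2` has 20% accuracy and three of its four wrong answers land on option D, the kind of clustering that points to a specific misconception rather than guessing. With only a handful of responses the share is not meaningful, so a real dashboard would want a minimum count before reporting it.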

Pedagogical Value of Automated Generation

The shift from manual to automated quiz creation offers several pedagogical advantages beyond time savings. First, the questions reflect what was actually taught, not what the instructor planned to teach. Lectures often deviate from lesson plans in response to student questions, unexpected conceptual difficulties, or productive tangents. An auto-generated quiz captures this reality, assessing understanding of the actual instruction delivered.

Second, the system can generate quizzes at higher frequency than would be practical with manual creation. An instructor might reasonably create one or two manually crafted assessments per week. With automated generation, brief comprehension checks become feasible after every major topic or concept, providing much finer-grained data on student understanding.

Third, question generation based on recent content reduces the cognitive load on instructors. Rather than mentally tracking which concepts need assessment while simultaneously teaching, explaining, and responding to questions, the instructor can focus entirely on instruction. The quiz generation happens afterward, based on a complete record of what transpired.

Finally, consistency emerges naturally. When the same system generates all questions using the same analysis of lecture content, question difficulty and format remain more stable than with manual creation. This consistency enables meaningful comparison of results across multiple quizzes throughout the semester.

Comprehension Data at Scale

The fundamental challenge of large lecture courses is information asymmetry. An instructor can observe general patterns—confused expressions, frequent questions about a topic, slow response to queries—but lacks detailed data about individual understanding. With 200 students, verbal questions come from perhaps fifteen voices. The other 185 remain largely invisible.

Auto-generated quizzes shift this dynamic. Every student responds to every question, creating a complete dataset of comprehension across the entire class. The system processes this data immediately, identifying not just overall accuracy but patterns of misunderstanding.

Consider a quiz on recursion with three questions: one testing basic definitional knowledge, one requiring application to a novel problem, and one asking students to identify errors in recursive code. Results showing 85% accuracy on definition, 60% on application, and 45% on error identification reveal a clear progression. Students can recognize concepts they’ve been taught but struggle to apply them and struggle more to debug them.

This granularity informs instructional decisions. The 25-point gap between definitional knowledge and application suggests a need for worked examples and practice problems. The further 15-point drop to error identification, which leaves debugging 40 points below definitions, might indicate that students need explicit instruction in error analysis, not just more exposure to correct implementations.
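The arithmetic behind that reading is simple enough to spell out. The snippet below uses the three accuracy figures from the example quiz; the variable names are illustrative only.

```python
# Accuracy per question tier, in percent, from the example quiz above.
accuracy_pct = {"definition": 85, "application": 60, "error identification": 45}

tiers = list(accuracy_pct)
# Drop in percentage points between each tier and the next, harder one.
drops = {
    f"{easier} -> {harder}": accuracy_pct[easier] - accuracy_pct[harder]
    for easier, harder in zip(tiers, tiers[1:])
}
total_drop = accuracy_pct[tiers[0]] - accuracy_pct[tiers[-1]]

print(drops)       # {'definition -> application': 25, 'application -> error identification': 15}
print(total_drop)  # 40
```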

Temporal Patterns and Longitudinal Analysis

When auto-generated quizzes occur regularly throughout a semester, the accumulated data reveals patterns that single assessments cannot. An instructor teaching a computer science course might deploy brief quizzes after every major topic: variables and types, control flow, functions, recursion, data structures, algorithms.

The question formats remain consistent—each quiz includes definitional questions, application problems, and error identification. This consistency enables comparison. If students consistently show 70-80% accuracy on definitions but 40-50% on error identification across multiple topics, that pattern suggests a systematic gap in debugging skills rather than difficulty with specific content.

The system can also track individual student trajectories. If a student shows strong performance early in the semester but declining scores on recent quizzes, that pattern might indicate increasing difficulty, reduced engagement, or personal circumstances affecting performance. Conversely, students showing steady improvement across multiple assessments demonstrate successful knowledge building.
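One simple way to turn a sequence of quiz scores into a trajectory is the least-squares slope of score against quiz number. The sketch below is an assumption about how such tracking could work, not a description of the system's method; the `trend` and `classify` helpers, the 3-points-per-quiz threshold, and the score histories are all invented for illustration.

```python
def trend(scores: list[float]) -> float:
    """Least-squares slope of score against quiz index, in points per quiz."""
    n = len(scores)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(scores))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def classify(scores: list[float], threshold: float = 3.0) -> str:
    """Label a trajectory by comparing its slope with a points-per-quiz threshold."""
    slope = trend(scores)
    if slope <= -threshold:
        return "declining"
    if slope >= threshold:
        return "improving"
    return "steady"


# Hypothetical quiz scores (percent) across four quizzes.
history = {
    "student_a": [90, 85, 70, 60],
    "student_b": [55, 65, 70, 80],
    "student_c": [75, 78, 74, 76],
}
labels = {student: classify(scores) for student, scores in history.items()}
print(labels)
# {'student_a': 'declining', 'student_b': 'improving', 'student_c': 'steady'}
```

A slope says nothing about why scores are moving, so a "declining" label is a prompt for a conversation rather than a diagnosis.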

These longitudinal patterns inform not just immediate instruction but course design. Persistent difficulty with certain question types or consistent drops in performance at specific points in the semester can guide restructuring for future course iterations.

Integration with Real-Time Instruction

The 15-minute quiz window gives the instructor flexibility in timing. A quiz can be deployed at a natural break: during the last fifteen minutes of class, during a scheduled break, or as an immediate post-class assessment. The timing affects what becomes possible.

A mid-class quiz can inform the remainder of that session. If results show widespread confusion about a concept just taught, the instructor can address it before students leave. An end-of-class quiz provides data that shapes the next session, allowing the instructor to begin with targeted review of identified difficulties.

The real-time nature of the analysis matters. Traditional homework assignments provide valuable formative data, but that data arrives hours or days after instruction. By then, students have moved on mentally, and the instructor must interrupt new material to circle back. Immediate assessment creates temporal proximity between instruction, assessment, and response.

The Question of Question Quality

Automatically generated questions raise natural concerns about quality and appropriateness. Can a system that has only listened to a lecture generate questions as pedagogically valuable as those crafted by an experienced instructor?

The answer is nuanced. The system generates questions that accurately reflect lecture content and target the concepts actually taught. It maintains consistent difficulty levels and question formats. It creates assessments rapidly enough to enable frequent formative checking. These capabilities have value.

However, the system operates within constraints. It generates questions based on what was said, not on deep understanding of common student misconceptions, the conceptual structure of the discipline, or the specific learning objectives for the course. An expert instructor crafting questions manually might design prompts that specifically target known areas of difficulty, probe the boundaries between related concepts, or assess understanding in ways that require sophisticated domain knowledge.

The optimal approach may involve both automated and manual creation. Auto-generated quizzes provide frequent, low-stakes comprehension checks that would be impractical to create manually. These establish a baseline of understanding and flag areas needing attention. The instructor can then craft more sophisticated assessments—midterms, final exams, major assignments—that probe understanding more deeply.

Data Density and Instructional Response

The compressed timeline from lecture to quiz to analyzed results creates an unusually dense flow of information. Within a single class period, an instructor can teach new content, assess student comprehension of that content, and receive detailed analysis of understanding patterns across the entire class. This density enables responsive instruction that adjusts quickly to observed difficulties.

Consider two scenarios. In the first, an instructor teaches recursion, assigns homework problems, and discovers two days later—when grading—that most students struggled with base case identification. Addressing this requires re-teaching material that students encountered two days ago and may have already attempted to learn through trial and error.

In the second scenario, the instructor teaches recursion, deploys an auto-generated quiz during class, and receives immediate data showing that 65% of students misunderstand base cases. This information arrives while recursion is still the active topic. The instructor can address the misconception immediately, provide additional examples, and clarify the concept before students leave. The temporal proximity between instruction, assessment, and correction reduces the inefficiency of delayed feedback.

Summary

The capacity to generate contextually relevant quizzes from live lecture content shifts several pedagogical constraints. The cognitive and time costs of creating frequent comprehension checks decrease substantially when question generation is automated. The temporal gap between instruction and assessment narrows when quizzes can be deployed immediately after teaching. The information asymmetry in large courses shrinks when every student responds to every question and all responses are analyzed systematically.

These shifts do not eliminate the need for instructor expertise in assessment design, nor do they replace carefully crafted summative evaluations. They do, however, make frequent formative assessment practical at a scale that was previously difficult. The system listens to lectures, generates questions from content actually taught, and provides analyzed results within minutes. Instructors receive detailed comprehension data while material remains current, enabling instructional adjustment based on observed understanding rather than assumption.
